[Translation] Request Flow for Provisioning an Instance in OpenStack

Translated from the original article: Request Flow for Provisioning Instance in Openstack

However, the original site is no longer reachable; perhaps the author no longer maintains it, which is a pity.

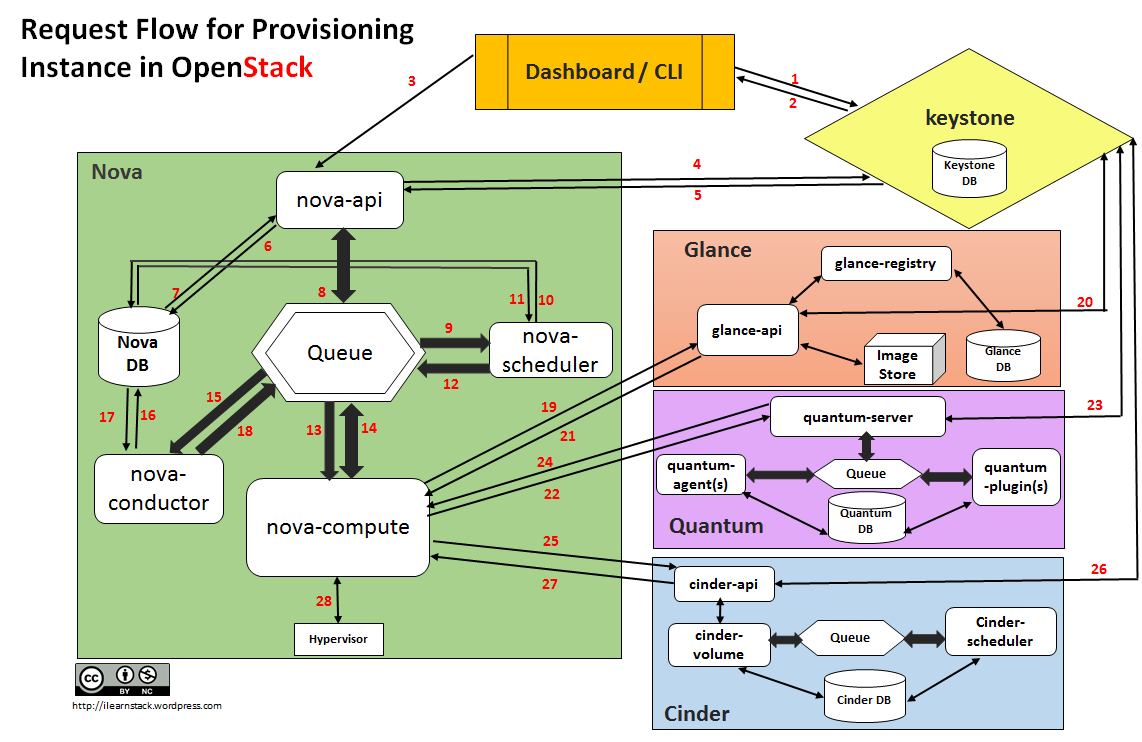

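One of the most important use cases in any cloud is provisioning a virtual machine. This article walks through provisioning an instance (VM) on an OpenStack-based cloud, covering the request flow and the interactions between components across the various OpenStack projects. The end result is a running virtual machine.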

Creating a VM involves interactions among the following OpenStack components:

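- CLI (command-line interpreter): submits commands to OpenStack Compute.

- Dashboard (Horizon): provides a web-based user interface for OpenStack services.

- Compute (Nova): retrieves virtual machine images from Glance, attaches the flavor and associated metadata, and turns users' API requests into running instances.

- Network (Neutron): provides virtual networking for Compute, allowing users to create their own networks and attach them to instances.

- Block Storage (Cinder): provides persistent storage for Compute instances.

- Image (Glance): stores virtual machine images.

- Identity (Keystone): provides authentication and authorization for all OpenStack services.

- Message queue (RabbitMQ): handles internal communication within OpenStack components such as Nova, Neutron, and Cinder.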

The request flow for creating a VM looks roughly like this:

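1. The Dashboard or CLI receives the user's credentials and sends them to Keystone in a REST call.

2. Keystone authenticates the credentials, then generates and returns an auth token, which is used to send REST requests to the other OpenStack components.

3. The Dashboard or CLI converts the new-instance request (made via "Launch Instance" or `nova boot`) into a REST API request and sends it to nova-api.

4. nova-api receives the request and asks Keystone to validate the auth token and access permissions.

5. Keystone validates the token and returns updated authentication headers containing roles and permissions.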

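6. nova-api interacts with the nova database (nova-database).

7. An initial database entry is created for the new instance.

8. nova-api sends an RPC request to nova-scheduler, expecting to get back the updated instance entry with the host ID specified.

9. nova-scheduler picks the request up from the message queue.

10. nova-scheduler interacts with nova-database and finds a suitable host ID by filtering and weighing.

11. nova-scheduler returns the updated instance entry with the host ID.

12. nova-scheduler sends an RPC request to nova-compute to launch the instance on the chosen compute node.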

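13. nova-compute picks the request up from the message queue.

14. nova-compute sends an RPC request to nova-conductor to fetch instance information such as the host ID and flavor (RAM, CPU, disk).

15. nova-conductor picks the request up from the message queue.

16. nova-conductor interacts with nova-database.

17. nova-conductor returns the instance information.

18. nova-compute picks the instance information up from the message queue.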

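19. nova-compute passes the auth token in a REST call to glance-api to get the image URI for the image ID from Glance, and downloads the image from the image store.

20. glance-api validates the auth token with Keystone.

21. nova-compute gets the image metadata.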

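22. nova-compute passes the auth token in a REST API call to the Networking service to allocate and configure network resources, giving the instance an IP address.

23. neutron-server validates the auth token with Keystone.

24. nova-compute gets the network information.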

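25. nova-compute passes the auth token in a REST API call to the Volume service to allocate storage resources (block storage) for the instance.

26. cinder-api validates the auth token with Keystone.

27. nova-compute gets the block storage information.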

28. nova-compute generates the data for the hypervisor driver and executes the request on the hypervisor (via libvirt).
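
Steps 1 to 3 are plain REST calls, so their client side can be sketched with the Python standard library. The sketch below only builds the two requests without sending them. It assumes the Keystone v3 password method (`POST /v3/auth/tokens`) and the Compute v2.1 `POST /servers` API; the endpoint URLs and all argument values are hypothetical placeholders, and a real client such as the `openstack` CLI does much more (for example, reading endpoints from the service catalog returned with the token).

```python
import json
import urllib.request

# Hypothetical endpoints; a real client takes these from the service catalog.
KEYSTONE_URL = "http://controller:5000/v3"
NOVA_URL = "http://controller:8774/v2.1"


def build_token_request(username, password, project, domain="Default"):
    """Step 1: request a project-scoped token with password credentials."""
    body = {
        "auth": {
            "identity": {
                "methods": ["password"],
                "password": {
                    "user": {
                        "name": username,
                        "domain": {"name": domain},
                        "password": password,
                    }
                },
            },
            "scope": {"project": {"name": project, "domain": {"name": domain}}},
        }
    }
    return urllib.request.Request(
        KEYSTONE_URL + "/auth/tokens",
        data=json.dumps(body).encode(),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def build_boot_request(token, name, image_id, flavor_id, network_id):
    """Step 3: ask nova-api to create a server, passing the token from step 2."""
    body = {
        "server": {
            "name": name,
            "imageRef": image_id,
            "flavorRef": flavor_id,
            "networks": [{"uuid": network_id}],
        }
    }
    return urllib.request.Request(
        NOVA_URL + "/servers",
        data=json.dumps(body).encode(),
        headers={"Content-Type": "application/json", "X-Auth-Token": token},
        method="POST",
    )
```

Sending the first request with `urllib.request.urlopen` and reading the `X-Subject-Token` response header gives the token that the second request carries as `X-Auth-Token`.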

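Step 10 (finding a host "by filtering and weighing") follows a filter-then-weigh pattern: drop hosts that cannot fit the flavor, then rank the remaining ones. The sketch below is a simplified illustration of that idea, not Nova's scheduler code; `HostState`, `Flavor`, `fits`, `weigh` and `select_host` are hypothetical names, and unlike Nova's weighers it does not normalize weights.

```python
from dataclasses import dataclass


@dataclass
class HostState:
    # Hypothetical, simplified view of a compute node's free resources.
    host_id: str
    free_ram_mb: int
    free_vcpus: int
    free_disk_gb: int


@dataclass
class Flavor:
    ram_mb: int
    vcpus: int
    disk_gb: int


def fits(host, flavor):
    """Filter: keep only hosts with enough free RAM, vCPUs and disk."""
    return (
        host.free_ram_mb >= flavor.ram_mb
        and host.free_vcpus >= flavor.vcpus
        and host.free_disk_gb >= flavor.disk_gb
    )


def weigh(host, ram_multiplier=1.0, cpu_multiplier=0.0):
    """Weigher: higher is better; by default prefer the host with the most free RAM."""
    return ram_multiplier * host.free_ram_mb + cpu_multiplier * host.free_vcpus


def select_host(hosts, flavor):
    """Filter out hosts that cannot fit the flavor, then return the best-weighted one."""
    candidates = [h for h in hosts if fits(h, flavor)]
    if not candidates:
        raise RuntimeError("No valid host was found")
    return max(candidates, key=weigh).host_id
```

For example, with a 4096 MB / 2 vCPU / 40 GB flavor, a host with only 2048 MB of free RAM or only 1 free vCPU is filtered out before weighing. In recent Nova releases much of the resource filtering is done through the Placement service, so treat this purely as an illustration of the concept.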
The table below shows the instance state at different steps of the provisioning process:

| Status | Task state | Power state | Steps |
|---|---|---|---|
| Build | scheduling | None | 3-12 |
| Build | networking | None | 22-24 |
| Build | block_device_mapping | None | 25-27 |
| Build | spawning | None | 28 |
| Active | none | Running | |
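
The same table can be read as a lookup from a step number (as used in its Steps column) to the status, task state and power state. The sketch below only restates the table; the names `BUILD_STATES`, `state_at_step` and `FINAL_STATE` are hypothetical, and steps the table does not list return `None`.

```python
# Rows of the state table: (first_step, last_step, status, task_state, power_state).
BUILD_STATES = [
    (3, 12, "Build", "scheduling", None),
    (22, 24, "Build", "networking", None),
    (25, 27, "Build", "block_device_mapping", None),
    (28, 28, "Build", "spawning", None),
]

# State once spawning has finished successfully (last row of the table).
FINAL_STATE = ("Active", None, "Running")


def state_at_step(step):
    """Return (status, task_state, power_state) for a step, or None if unlisted."""
    for first, last, status, task_state, power_state in BUILD_STATES:
        if first <= step <= last:
            return status, task_state, power_state
    return None
```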

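Around line 2449 of https://github.com/openstack/nova/blob/master/nova/compute/manager.py (at the time of writing; line numbers on master change over time), you can see the instance creation flow that covers the steps after step 19.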


The following are done within `self._build_resources`:

- Allocate the network


- Create the block devices


- Create the image, then create the domain

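
In manager.py, `_build_resources` is written as a context manager: network and block devices are prepared before the `with` block, the driver's spawn runs inside it, and cleanup happens if something inside the block fails. The sketch below mimics that shape with hypothetical stand-in functions (`allocate_network`, `prepare_block_devices`, `spawn`, `cleanup`) that only record events; it is a simplified illustration, not Nova's code, and it ignores details such as cleaning up when the block-device step itself fails.

```python
import contextlib

# Hypothetical log of what each stand-in step did, in order.
events = []


def allocate_network(instance):
    # Stand-in for allocating ports/IPs through Neutron.
    events.append(f"network allocated for {instance}")
    return {"network": "placeholder"}


def prepare_block_devices(instance):
    # Stand-in for preparing volumes through Cinder.
    events.append(f"block devices prepared for {instance}")
    return {"block_devices": []}


def cleanup(instance):
    # Stand-in for releasing the network and block-device resources.
    events.append(f"cleaned up {instance}")


@contextlib.contextmanager
def build_resources(instance):
    """Prepare network and block devices; clean up if the with-block raises."""
    network_info = allocate_network(instance)
    block_device_info = prepare_block_devices(instance)
    try:
        yield {"network_info": network_info, "block_device_info": block_device_info}
    except Exception:
        cleanup(instance)
        raise


def spawn(instance, resources):
    # Stand-in for driver.spawn(): create the image, then create the domain.
    events.append(f"image created for {instance}")
    events.append(f"domain created for {instance}")


def build_and_run_instance(instance):
    with build_resources(instance) as resources:
        spawn(instance, resources)
```

Calling `build_and_run_instance("vm1")` records four events in order: network, block devices, image, domain.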

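The libvirt driver's implementation of instance creation is in nova/virt/libvirt/driver.py (besides libvirt, Nova has other virt drivers such as vmwareapi). Internally it uses the libvirt Python bindings to drive libvirt: it first generates the instance's domain XML, then passes that XML to the bindings.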

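
The two-stage approach described above (generate the domain XML, then hand it to libvirt) can be sketched as follows. `build_domain_xml` is a hypothetical helper that produces a minimal KVM domain definition with the standard library; Nova's real XML is far richer. `define_and_start` uses the libvirt-python calls `libvirt.open`, `virConnect.defineXML` and `virDomain.create`; it needs the `libvirt-python` package and a running libvirt daemon, and the connection URI and disk path are placeholders.

```python
import xml.etree.ElementTree as ET


def build_domain_xml(name, memory_mb, vcpus, disk_path):
    """Build a minimal libvirt domain XML string for a KVM guest."""
    domain = ET.Element("domain", type="kvm")
    ET.SubElement(domain, "name").text = name
    ET.SubElement(domain, "memory", unit="MiB").text = str(memory_mb)
    ET.SubElement(domain, "vcpu").text = str(vcpus)
    os_el = ET.SubElement(domain, "os")
    ET.SubElement(os_el, "type").text = "hvm"
    devices = ET.SubElement(domain, "devices")
    disk = ET.SubElement(devices, "disk", type="file", device="disk")
    ET.SubElement(disk, "driver", name="qemu", type="qcow2")
    ET.SubElement(disk, "source", file=disk_path)
    ET.SubElement(disk, "target", dev="vda", bus="virtio")
    return ET.tostring(domain, encoding="unicode")


def define_and_start(xml, uri="qemu:///system"):
    """Hand the XML to libvirt through the libvirt-python bindings."""
    import libvirt  # imported lazily so build_domain_xml works without libvirt installed

    conn = libvirt.open(uri)
    try:
        dom = conn.defineXML(xml)  # persistently define the domain
        dom.create()  # boot the defined domain
        return dom.name()
    finally:
        conn.close()
```

On a host where libvirt is available, `define_and_start(build_domain_xml("vm1", 2048, 2, "/path/to/disk.qcow2"))` would define and boot a guest named `vm1`.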